Understanding CISSP Domain 3: Security Architecture and Engineering - Part 2

In Part 1 of Domain 3, Security Architecture and Engineering, we covered secure design principles, security models, structured approaches to selecting controls, the capabilities of system components, and the mitigation of system vulnerabilities.

In Part 2, we delve into objective 3.6, selecting cryptographic solutions. Let’s dive into crypto and cover the material following the ISC2 exam outline.

3.6 - Select and determine cryptographic solutions

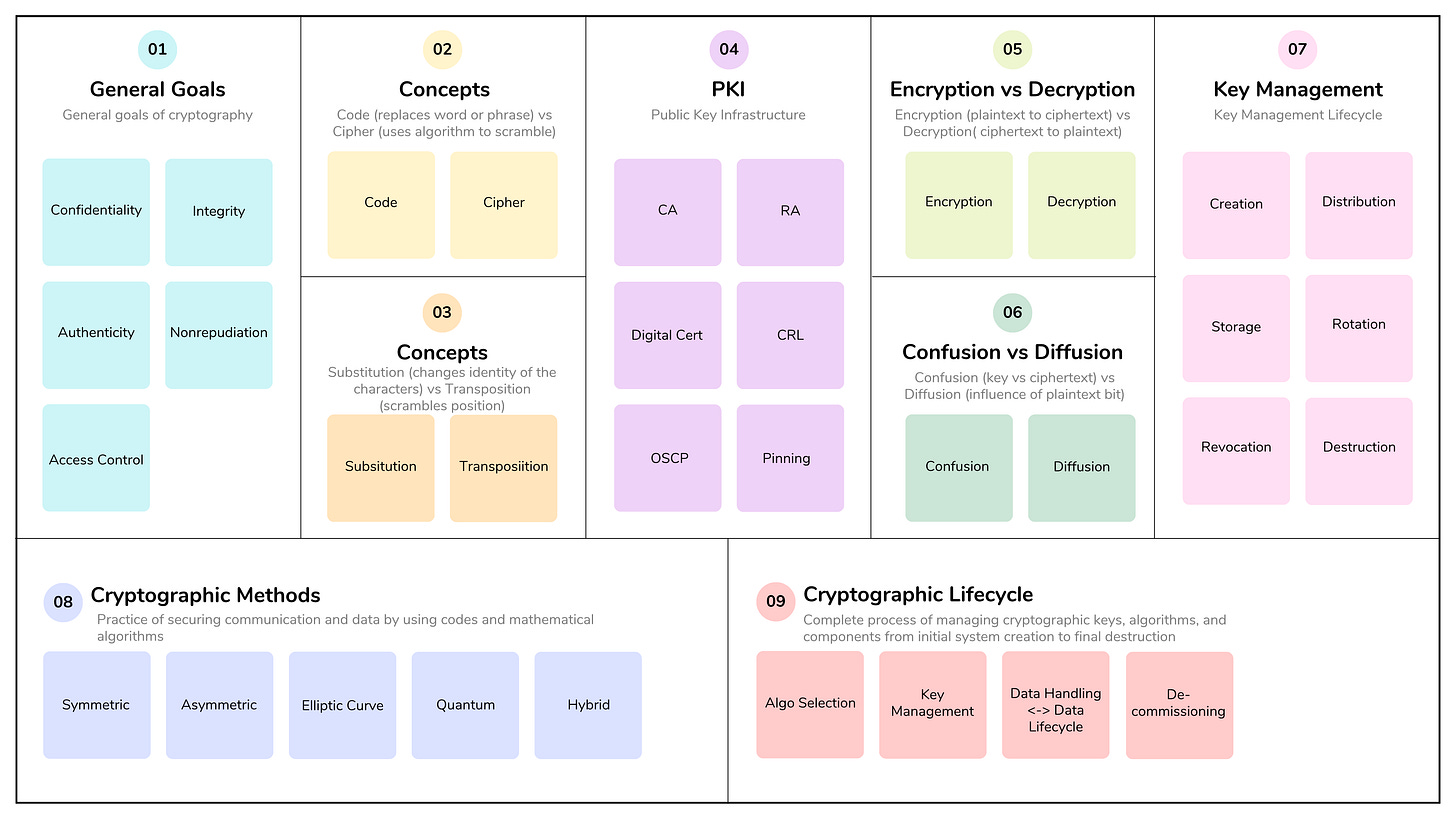

Objective 3.6 concerns the secure management of cryptographic keys and the selection of algorithms, including a review of cryptographic methods such as symmetric, asymmetric, elliptic curve, and quantum cryptography, as well as public key infrastructure (PKI). We also highlight effective key management, which is essential to ensuring the confidentiality, integrity, and availability (CIA) of data protected through cryptographic means.

Cryptographic life cycle (e.g., keys, algorithm selection)

The cryptographic life cycle is the complete process of managing cryptographic keys, algorithms, and components from initial system creation to final destruction. Because technological improvements continue at an increasing pace, it’s important to make decisions about cryptographic algorithm selection, the appropriate key lengths for each algorithm, and the best implementation to keep data safe for the time required to meet business requirements.

Goals of the Cryptographic Life Cycle

The ultimate goal is to maintain the integrity and security of a cryptographic system at every stage, ensuring data confidentiality, integrity, and availability. This goal is achieved by ensuring that the best decisions are made and practices used to provide adequate security against technological improvements and emerging threats:

Algorithm Selection: Choosing the right type of cryptography (symmetric, public key, hashing) and the specific algorithm and key length for the security needs and future protection of data based on business requirements.

Key Management: Implementing procedures to manage keys securely at every stage. This includes establishing policies for key rotation, access control, and auditing.

Data Handling: The cryptographic lifecycle is intrinsically linked to the data lifecycle, encompassing creation, storage, use, sharing, archiving, and disposal. Each stage presents unique security challenges, requiring appropriate controls to prevent compromise, such as strong algorithms, secure key exchange methods, and robust destruction techniques.

Decommissioning: Tracking the useful life and ability to protect sensitive data, and safely retiring systems or keys as appropriate. This includes ensuring the implementation of new and safe methods or keys to protect data from retired systems.

Note that NIST has defined terms to help describe the appropriateness and level of algorithms and key lengths, included here for completeness:

Approved: an algorithm that is specified as a NIST or FIPS recommendation.

Acceptable: an algorithm combined with a suitable key length is safe today.

Deprecated: an algorithm with a particular key length may be used, but it brings some risk.

Restricted: use of an algorithm and/or key length is deprecated and should be avoided.

Legacy: the algorithm and/or key length is outdated and should be avoided when possible.

Disallowed: an algorithm and/or key length is no longer allowed for the indicated use.

Cryptographic methods (e.g., symmetric, asymmetric, elliptic curves, quantum)

The dictionary definition of cryptography is the “art or practice of writing or solving codes.” In terms of the CISSP, it is the practice of securing communication and data by using codes and mathematical algorithms to encode and decode information, ensuring privacy and protection against unauthorized access. It is fundamental to cybersecurity and is used to protect sensitive information like credit card numbers, passwords, and private messages.

The term originates from the Greek words kryptós (“hidden”) and graphein (“to write”). Historically, cryptography focused on encrypting text messages, but today it is a fundamental pillar of modern cybersecurity, securing digital information that is at rest, in transit, and in use.

Using a cryptographic system, one or more of the following goals can be met:

Confidentiality: Cryptography ensures that information is only accessible to authorized users through encryption.

Integrity: Integrity guarantees that data has not been altered during transmission or storage. Cryptography ensures this with hashing and message authentication.

Authenticity: Authenticity verifies the identity of the sender, the recipient, or the origin of data. Cryptography achieves this using digital signatures and Public Key Infrastructure (PKI).

Non-repudiation: Non-repudiation provides strong evidence that a specific action occurred, preventing a party (at origin or delivery) from credibly denying their involvement. It is achieved through digital signatures.

Access Control: Cryptography can act as an enabling technology for access control. Access to data is controlled by controlling access to encryption keys. By managing the distribution, storage, and lifecycle of keys, administrators can determine who can decrypt data and when. Digital certificates and secure protocols like TLS can be used to authenticate users and systems, ensuring that only authorized entities can access resources.

Concepts and terminology

Cryptanalysis is the study of techniques for deciphering encrypted messages, codes, and ciphers without having access to the secret information—such as the key—that is normally required. Essentially, it involves analyzing cryptographic systems to find weaknesses.

Encryption and Decryption: Encryption is the process of converting readable information, called plaintext, into an unreadable, scrambled form, called ciphertext. Decryption is the reverse process that converts unreadable ciphertext back into its original readable plaintext.

Substitution and transposition are two fundamental techniques used to create a cipher. The key distinction is in how they alter the plaintext message:

Substitution changes the identity of the characters. A substitution cipher replaces each character (or group of characters) in the plaintext with a different character, number, or symbol based on a key. The position of each character remains the same. Examples include the Caesar Cipher (see below).

A transposition cipher scrambles the positions of plaintext characters without changing their values. The resulting ciphertext is a rearrangement of the original plaintext. An example is the Rail fence (zigzag) cipher.
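
The difference between the two techniques can be sketched in a few lines of Python. This is an illustrative sketch only: the function names are hypothetical, both ciphers are historical, and neither should be used to protect real data.

```python
def caesar_encrypt(plaintext: str, shift: int) -> str:
    """Substitution: each letter is replaced, but keeps its position."""
    out = []
    for ch in plaintext:
        if ch.isascii() and ch.isalpha():
            base = ord("A") if ch.isupper() else ord("a")
            out.append(chr((ord(ch) - base + shift) % 26 + base))
        else:
            out.append(ch)  # leave spaces and punctuation untouched
    return "".join(out)


def rail_fence_encrypt(plaintext: str, rails: int) -> str:
    """Transposition: each character is kept, but its position changes."""
    if rails < 2:
        return plaintext
    rows = [[] for _ in range(rails)]
    row, step = 0, 1
    for ch in plaintext:
        rows[row].append(ch)
        if row == 0:
            step = 1            # bounce off the top rail
        elif row == rails - 1:
            step = -1           # bounce off the bottom rail
        row += step
    return "".join("".join(r) for r in rows)


substituted = caesar_encrypt("HELLO", 3)            # letters change identity
transposed = rail_fence_encrypt("HELLOWORLD", 3)    # letters change position
```

Note that the transposed output contains exactly the same letters as the input, only reordered, while the substituted output contains different letters in the original order.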

Code and Cipher: A code replaces a meaningful word or phrase with a substitute word, number, or symbol. Codes operate on entire units of meaning, not individual letters or bits. A cipher uses a specific algorithm to scramble individual letters or bits of a message. A key is needed to decrypt the message.

Examples of ciphers include:

Stream: A stream cipher is a symmetric encryption algorithm that encrypts data one bit or one byte at a time. It uses a long, pseudo-random stream of bits, called a keystream, which is combined with the plaintext using the XOR operation to produce the ciphertext.

Block: Encrypts data in fixed-size blocks. To cover data that isn’t a perfect multiple of the block size, padding is often used. Most modern symmetric ciphers are block ciphers.

Caesar: A simple substitution cipher where each letter is shifted a fixed number of places. You should recognize this as a historical, easily broken example of a substitution cipher.

Vigenère: An early and more complex polyalphabetic substitution cipher that improved on simple substitution, but is still vulnerable to modern cryptanalysis.

One-time Pad: The only theoretically unbreakable cipher, but only if its strict requirements are met: a truly random key, used only once, and at least as long as the message. Understand its practical challenges related to key distribution and management.
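
The one-time pad requirements can be illustrated with a short sketch that combines the message and the pad with XOR, the same operation stream ciphers use. The sketch assumes Python's `secrets` module as the source of randomness, which is a cryptographically secure pseudo-random generator rather than a truly random source, so it only approximates a real one-time pad. The function name `xor_bytes` is hypothetical.

```python
import secrets


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    if len(a) != len(b):
        raise ValueError("pad must be exactly as long as the message")
    return bytes(x ^ y for x, y in zip(a, b))


message = b"ATTACK AT DAWN"
pad = secrets.token_bytes(len(message))   # key as long as the message
ciphertext = xor_bytes(message, pad)
recovered = xor_bytes(ciphertext, pad)    # XOR with the same pad decrypts

# Why the pad must be used only once: if a second message is encrypted
# with the same pad, XORing the two ciphertexts cancels the pad and
# leaks the XOR of the two plaintexts.
other = b"RETREAT AT TEN"
leak = xor_bytes(ciphertext, xor_bytes(other, pad))
```

Here `leak` equals the XOR of the two plaintexts, which an attacker can often unravel with language statistics, even though the pad itself was never revealed.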

Trust and Assurance: Trust is the acceptance that a particular entity (network, system, person, or process) is legitimate and that its claims and actions are reliable. Trust is not absolute. It is a judgment that is based on specific evidence (evaluation, testing, and validation) and the applied policies surrounding the system. Assurance is the measure (or degree) of confidence that the security features of a cryptographic system are working correctly and effectively. It is the evidence and evaluation that prove a system is worthy of the trust placed in it.

Confusion and Diffusion are two fundamental principles that are used together to create strong, secure ciphers. The goal is to hide the relationship between the plaintext, ciphertext, and the key, making it extremely difficult for an attacker to break the encryption.

Confusion is the principle of making the relationship between the key and the ciphertext as complex as possible. This is typically achieved through substitution, where each bit or block of plaintext is replaced with another, different value.

Diffusion is the principle of spreading the influence of a single plaintext bit over as much of the ciphertext as possible. This helps to hide any statistical patterns or redundancies in the original plaintext.

A zero-knowledge proof (ZKP) is a cryptographic method where one party (“prover”) can prove a statement (e.g. piece of information or a secret) is true to another party (“verifier”) without revealing any additional information beyond the truth of the statement. For instance, a ZKP could prove you meet an age requirement without revealing your date of birth, or verify your identity without revealing personal information.

Work factor is an estimate of the effort or time to break a cryptographic system. The greater the system's security, the higher the work factor. In other words, a higher work factor indicates a more secure system, as it requires more resources and makes an attack less feasible.

Work factor isn’t a single, fixed number but an estimation influenced by several variables:

Key length: In general, longer keys provide a higher work factor.

Cryptographic algorithm: The specific algorithm used affects its resistance to different forms of cryptanalysis, and thus the associated work factor.

Adversary’s resources: The work factor is dependent on the attacker’s capabilities, including their computing power and financial resources.

In general, the work factor should match the value of the asset to be protected and the time required to meet business requirements.

Kerckhoffs’s Principle (AKA Kerckhoffs’s assumption): a cryptographic system should be secure even if everything about the system, except the key, is public knowledge.

Key space is the set of all possible keys that can be used with a specific cryptographic algorithm. The size of the key space is the total number of unique keys available. A larger key space is a critical factor in a cryptographic algorithm’s strength because it increases its resistance to brute-force attacks.
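
As a rough illustration of how key length drives key space and work factor, the sketch below computes the number of possible keys and the average brute-force time. The function names and the guess rate are assumptions chosen for illustration, not measured attacker capabilities.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def key_space_size(key_bits: int) -> int:
    """Number of distinct keys for a key of the given length in bits."""
    return 2 ** key_bits


def average_brute_force_years(key_bits: int, guesses_per_second: float) -> float:
    """On average, an attacker must search half the key space."""
    seconds = key_space_size(key_bits) / 2 / guesses_per_second
    return seconds / SECONDS_PER_YEAR


# Hypothetical attacker testing one trillion keys per second.
RATE = 1e12
for bits in (56, 128, 256):
    print(bits, key_space_size(bits), average_brute_force_years(bits, RATE))
```

Each additional key bit doubles the key space, which is why moving from 56-bit to 128-bit keys changes brute force from feasible to impractical under the same assumed guess rate.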

Symmetric Cryptography

Symmetric cryptography is an encryption method that uses a single, shared secret key for both encrypting plaintext and decrypting ciphertext. It is also known as secret-key, single-key, or shared-key encryption. The core principle is that the sender and receiver possess identical copies of the key and must keep it confidential for the encryption to be effective.

Advantages:

Speed and efficiency: Symmetric algorithms are significantly faster and more computationally efficient than asymmetric ones, because the mathematical operations are less complex. They are ideal for quickly encrypting large volumes of data.

Strong security: Modern symmetric ciphers, like the Advanced Encryption Standard (AES), are considered strong and secure. A 256-bit AES key is considered resistant to all known practical attacks, including brute-force.

Disadvantages:

Key distribution problem: The primary drawback is securely sharing the secret key between parties, especially over an insecure channel. If the key is intercepted during distribution, the entire system is compromised.

Scalability issues: In large networks with many users, key management becomes very complex. Since a unique key is ideally needed for every pair of communicating users, the number of keys to manage grows quadratically with the number of users.

The total number of keys required to connect n parties using symmetric cryptography is given by this formula: n(n - 1) / 2
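
A quick sketch of the formula shows how fast the number of pairwise keys grows. The comparison with asymmetric cryptography assumes one key pair (two keys) per party; the function names are hypothetical.

```python
def symmetric_keys_needed(n: int) -> int:
    """One shared key per pair of parties: n(n - 1) / 2."""
    return n * (n - 1) // 2


def asymmetric_keys_needed(n: int) -> int:
    """Assumes each party holds one public/private key pair."""
    return 2 * n


for parties in (10, 100, 1000):
    print(parties, symmetric_keys_needed(parties), asymmetric_keys_needed(parties))
```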

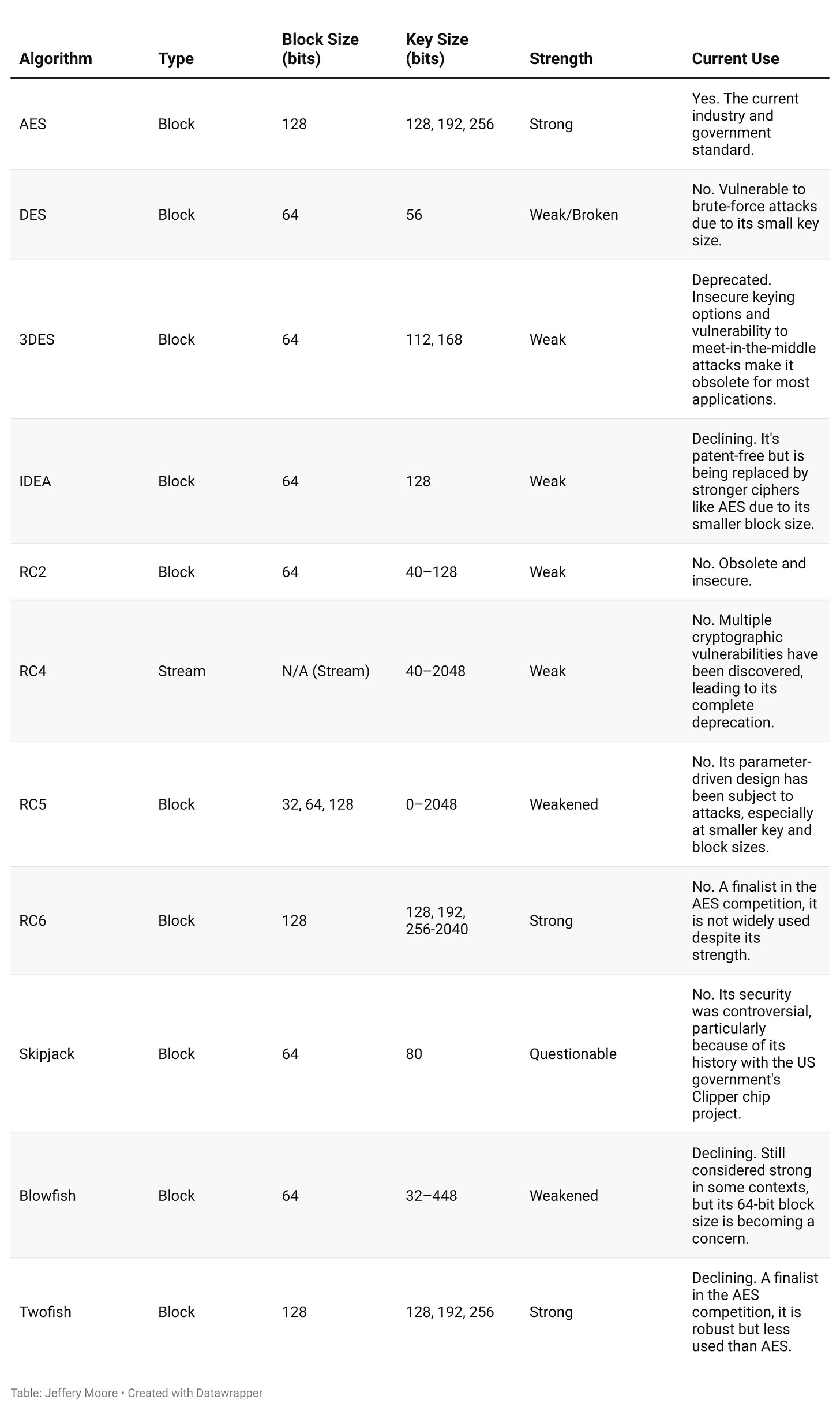

Table of Symmetric Algorithms

Modes of Operation

A cryptographic mode of operation is an algorithm that defines how to apply a block cipher to securely encrypt amounts of data larger than a single block. Think of a block cipher, like AES, as a function that scrambles a single, fixed-size unit of information. A mode of operation is the specific method or recipe for how to use that function repeatedly and securely on a larger, variable-length message.

Electronic Code Book (ECB) mode: the simplest and weakest of the modes. The plaintext is split into blocks of the cipher’s block size (64 bits for DES, 128 bits for AES), and each block is encrypted separately with the same key.

Advantages: ECB is fast and allows blocks to be processed simultaneously.

Disadvantages: identical plaintext blocks produce identical ciphertext blocks, so patterns in the plaintext remain visible in the ciphertext.

Cipher Block Chaining (CBC) mode: a block cipher mode of operation in which each plaintext block is XORed (exclusive-OR) with the previous ciphertext block before it is encrypted. This “chaining” means that each ciphertext block depends on all the preceding plaintext blocks, while decrypting a block requires only that ciphertext block and the one before it. The first block has no predecessor, so CBC uses an Initialization Vector (IV): a random, unpredictable value that is shared between the sender and receiver and does not need to be secret.

Advantages: CBC uses the previous ciphertext block to encrypt the next plaintext block, so identical plaintext blocks do not produce identical ciphertext blocks and patterns are hidden. A single bit error in a ciphertext block affects the decryption of only that block and the next, so error propagation is limited.

Disadvantages: CBC encryption is slower than other modes, because the blocks must be processed in order, not simultaneously (decryption can be parallelized). CBC with padding is also vulnerable to padding oracle attacks such as POODLE and GOLDENDOODLE.
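
The contrast between ECB and CBC can be sketched with a toy block cipher. Python's standard library does not include AES, so the sketch below assumes a small, insecure Feistel network built from HMAC-SHA256 as a stand-in; all function names are hypothetical, padding is omitted (inputs must be a multiple of the block size), and nothing here is suitable for real use.

```python
import hashlib
import hmac

BLOCK = 16  # toy block size in bytes


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def _round(key: bytes, i: int, half: bytes) -> bytes:
    # Round function of the toy Feistel cipher (NOT a real cipher).
    return hmac.new(key, bytes([i]) + half, hashlib.sha256).digest()[:BLOCK // 2]


def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    left, right = block[:BLOCK // 2], block[BLOCK // 2:]
    for i in range(4):
        left, right = right, _xor(left, _round(key, i, right))
    return left + right


def toy_decrypt_block(key: bytes, block: bytes) -> bytes:
    left, right = block[:BLOCK // 2], block[BLOCK // 2:]
    for i in reversed(range(4)):
        left, right = _xor(right, _round(key, i, left)), left
    return left + right


def ecb_encrypt(key: bytes, plaintext: bytes) -> bytes:
    # Every block is encrypted independently with the same key.
    return b"".join(toy_encrypt_block(key, plaintext[i:i + BLOCK])
                    for i in range(0, len(plaintext), BLOCK))


def cbc_encrypt(key: bytes, iv: bytes, plaintext: bytes) -> bytes:
    out, prev = [], iv
    for i in range(0, len(plaintext), BLOCK):
        # XOR with the previous ciphertext block, then encrypt.
        prev = toy_encrypt_block(key, _xor(plaintext[i:i + BLOCK], prev))
        out.append(prev)
    return b"".join(out)


def cbc_decrypt(key: bytes, iv: bytes, ciphertext: bytes) -> bytes:
    out, prev = [], iv
    for i in range(0, len(ciphertext), BLOCK):
        block = ciphertext[i:i + BLOCK]
        # Decrypt, then XOR with the previous ciphertext block.
        out.append(_xor(toy_decrypt_block(key, block), prev))
        prev = block
    return b"".join(out)


key = b"demo key, not for real use"
iv = bytes(BLOCK)            # fixed IV for a repeatable demo; use a random IV in practice
plaintext = b"A" * (2 * BLOCK)  # two identical plaintext blocks

ecb = ecb_encrypt(key, plaintext)
cbc = cbc_encrypt(key, iv, plaintext)
```

With two identical plaintext blocks, the two ECB ciphertext blocks are identical, while the two CBC ciphertext blocks differ because the second block is chained to the first.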

Cipher Feedback (CFB) mode: CFB is the streaming version of CBC. It is similar to CBC in that it uses an IV and the ciphertext from the previous block, which can cause errors to propagate. The main difference between CFB and CBC is that with CFB, the previous ciphertext block is encrypted first, then XORed with the current plaintext block.

Advantages: CFB is considered to be faster than CBC, even though it’s also sequential.

Disadvantages: if there’s an error in one block, it can carry over to the next.

Counter (CTR) mode: The key feature of CTR is that it allows parallel encryption and decryption without chaining. It combines a nonce with an incrementing counter to form a unique input for each block, encrypts that input to produce a keystream block, and XORs the keystream with the plaintext.

Advantage: CTR mode is fast and considered to be secure; errors do not propagate.

Disadvantage: provides no integrity protection on its own, so it must be paired with a message authentication code (MAC).
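
The counter construction, and the reason it needs separate integrity protection, can be sketched as follows. Because the standard library has no AES, the sketch assumes HMAC-SHA256 as a stand-in for the block cipher that turns each nonce-and-counter value into keystream; the function names are hypothetical and the code is for illustration only.

```python
import hashlib
import hmac


def ctr_keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Generate keystream by processing nonce || counter for each block."""
    out = b""
    counter = 0
    while len(out) < length:
        block_input = nonce + counter.to_bytes(8, "big")
        out += hmac.new(key, block_input, hashlib.sha256).digest()
        counter += 1   # each block is independent, so this could run in parallel
    return out[:length]


def ctr_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encryption and decryption are the same operation: XOR with the keystream."""
    keystream = ctr_keystream(key, nonce, len(data))
    return bytes(d ^ k for d, k in zip(data, keystream))


key = b"demo key, not for real use"
nonce = b"unique-nonce"     # must never repeat under the same key

ciphertext = ctr_xor(key, nonce, b"PAY 100")

# An attacker who knows the message format can flip bits without the key:
tampered = bytearray(ciphertext)
tampered[4] ^= ord("1") ^ ord("9")
forged = ctr_xor(key, nonce, bytes(tampered))   # decrypts cleanly to a different amount
```

The tampered ciphertext decrypts without any error to a message with a changed amount, which is why authenticated modes such as CCM and GCM add a MAC on top of counter mode.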

Counter with Cipher Block Chaining Message Authentication Code (CCM) mode: CCM uses counter mode for encryption, so there is no error propagation, and CBC-MAC for authentication, whose chaining prevents it from running in parallel. The MAC, or message authentication code, provides authentication and integrity.

Advantages: no error propagation, provides authentication and integrity.

Disadvantages: cannot be run in parallel.

Galois/Counter Mode (GCM): an authenticated encryption mode of operation for symmetric-key block ciphers, most commonly used with the Advanced Encryption Standard (AES). GCM is a combination of two distinct operations:

Counter Mode (CTR), which is used for confidentiality (encryption).

Galois Message Authentication Code (GMAC), which is built on a universal hash function and provides authenticity and integrity.

Advantages: GCM is extremely fast, is standardized by NIST (SP 800-38D), and is used in the IEEE 802.1AE (MACsec) standard.

Disadvantages: While highly performant on modern hardware with dedicated acceleration, it can have performance disadvantages on resource-constrained devices.

Output Feedback (OFB) mode: OFB turns a block cipher into a synchronous stream cipher. Based on an IV and the key, it generates keystream blocks, which are then XORed with the plaintext. As with CFB, the encryption and decryption processes are identical, and no padding is required.

Advantages: In OFB mode the keystream does not depend on the plaintext or ciphertext, so a bit error in the ciphertext affects only the corresponding plaintext bit and does not propagate. The keystream can also be computed in advance, before the message is available.

Disadvantages: OFB offers no data integrity protection, requires a unique IV for every message encrypted under the same key (reusing an IV exposes the keystream), and cannot be parallelized.

Asymmetric Cryptography

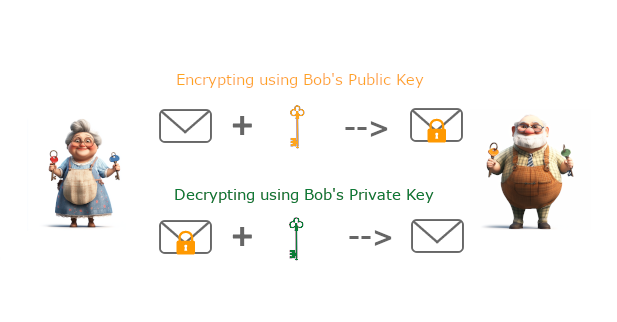

Asymmetric cryptography (also known as public-key cryptography) is a method of encrypting and decrypting data using two keys: a public and a private key.

Public key: one part of the matching key pair; the public key can be shared or published. It is used to encrypt data or messages. You can think of a public key like a public mailbox where anyone can drop off a letter, but only you (the mailbox owner) have the key to open it.

Private key: the other part of a matching key pair, the private key is kept secret by the owner and is used to decrypt data encrypted with the public key. The two keys are mathematically linked, but it is computationally infeasible to derive the private key from the public key.

If Betty and Bob want to exchange encrypted messages, they start by exchanging their public keys: Betty gives Bob her public key, and Bob gives Betty his.

Now, Betty can encrypt her message with Bob’s public key. She sends the message to Bob, who decrypts it with his private key, allowing him to unlock and read it. Only Bob can decrypt the message.

In the image above, Betty takes her original message and encrypts it with Bob’s public key. Bob can then decrypt the message using his private key.
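
The exchange between Betty and Bob can be sketched with textbook RSA and deliberately tiny numbers. This is a toy under stated assumptions: the primes are far too small to be secure, no padding is applied, the function names are hypothetical, and real systems rely on vetted libraries with much larger keys.

```python
# Bob's toy key pair, built from two tiny primes (insecure, illustration only).
p, q = 61, 53
n = p * q                      # public modulus
phi = (p - 1) * (q - 1)
e = 17                         # public exponent
d = pow(e, -1, phi)            # private exponent (requires Python 3.8+)

bob_public_key = (e, n)        # Bob shares this with Betty
bob_private_key = (d, n)       # Bob keeps this secret


def rsa_encrypt(message: int, public_key: tuple) -> int:
    exponent, modulus = public_key
    return pow(message, exponent, modulus)


def rsa_decrypt(ciphertext: int, private_key: tuple) -> int:
    exponent, modulus = private_key
    return pow(ciphertext, exponent, modulus)


message = 65                                         # must be smaller than n
ciphertext = rsa_encrypt(message, bob_public_key)    # Betty encrypts
recovered = rsa_decrypt(ciphertext, bob_private_key) # only Bob can decrypt
```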

Common Asymmetric Algorithms

RSA (Rivest-Shamir-Adleman): RSA is one of the most widely used public-key algorithms. Its security relies on the computational difficulty of factoring very large numbers. Widely used in SSL/TLS, secure email (S/MIME), and digital certificates.

ElGamal: a public-key cryptosystem based on the Diffie-Hellman key exchange. Its security relies on the difficulty of computing discrete logarithms. Used in some older versions of PGP and GNU Privacy Guard (GnuPG), though less common than RSA today.

Elliptic Curve Cryptography (ECC): Used for encryption (EC-ElGamal), key exchange (ECDH), and digital signatures (ECDSA). Its security is based on the elliptic curve discrete logarithm problem (ECDLP), which is considered more difficult to solve than the factoring problem (used by RSA) for a given key size, so ECC achieves comparable security with much shorter keys. ECC is gaining popularity in modern protocols for mobile and web applications, including Bitcoin and TLS 1.3.

Diffie-Hellman (DH): While DH is not a complete encryption system and does not provide authentication or non-repudiation on its own, it allows two parties to establish a shared secret key over an insecure communication channel. Like ElGamal, it relies on the computational difficulty of the discrete logarithm problem.
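
The Diffie-Hellman exchange can be sketched with modular exponentiation. The parameters below are assumptions chosen for readability: the modulus is the Mersenne prime 2^127 - 1 and the base is 3, neither of which is a recommended real-world choice, and the exchange is unauthenticated, so it offers no protection against a man-in-the-middle.

```python
import secrets

# Public parameters agreed in the open (toy values, not for real use).
P = 2**127 - 1    # a prime modulus
G = 3             # public base

# Each party picks a private value and publishes G^private mod P.
betty_private = secrets.randbelow(P - 3) + 2
bob_private = secrets.randbelow(P - 3) + 2

betty_public = pow(G, betty_private, P)   # sent to Bob over the insecure channel
bob_public = pow(G, bob_private, P)       # sent to Betty over the insecure channel

# Each side combines its own private value with the other's public value.
betty_shared = pow(bob_public, betty_private, P)
bob_shared = pow(betty_public, bob_private, P)
```

Both sides arrive at the same shared secret, while an eavesdropper who sees only the public values would need to solve a discrete logarithm to recover it.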

Hybrid Cryptography

Hybrid cryptography is a method that combines the speed and efficiency of symmetric-key cryptography with the secure key exchange capabilities of asymmetric (public-key) cryptography. Nearly all practical uses of public-key cryptography today follow this hybrid approach.

The process works as follows:

Session key generation: The sender creates a random, single-use symmetric key, also known as a session key.

Data encryption: The sender uses this fast session key to symmetrically encrypt the bulk of the data.

Key encryption: The sender encrypts the small session key using the recipient’s public key (asymmetric encryption).

Transmission: The sender transmits both the symmetrically encrypted data and the asymmetrically encrypted session key to the recipient.

Decryption: The recipient uses their private key to decrypt the session key. Then, with the now-revealed symmetric key, they can efficiently decrypt the large encrypted data.
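
The five steps above can be sketched end to end with toy building blocks. Everything here is an assumption made for illustration: the recipient's RSA key is built from two Mersenne primes and used without padding (insecure), the symmetric cipher is a simple SHA-256 keystream rather than AES, and the function names are hypothetical.

```python
import hashlib
import secrets

# Recipient's toy RSA key pair (insecure parameters, illustration only).
P, Q = 2**127 - 1, 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))   # requires Python 3.8+
PUBLIC_KEY, PRIVATE_KEY = (E, N), (D, N)


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy symmetric cipher: XOR data with a SHA-256 based keystream."""
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))


def hybrid_encrypt(message: bytes, public_key: tuple):
    e, n = public_key
    session_key = secrets.token_bytes(16)                         # 1. session key generation
    encrypted_data = keystream_xor(session_key, message)          # 2. data encryption
    wrapped_key = pow(int.from_bytes(session_key, "big"), e, n)   # 3. key encryption
    return wrapped_key, encrypted_data                            # 4. transmission


def hybrid_decrypt(wrapped_key: int, encrypted_data: bytes, private_key: tuple) -> bytes:
    d, n = private_key
    session_key = pow(wrapped_key, d, n).to_bytes(16, "big")      # 5. recover the session key
    return keystream_xor(session_key, encrypted_data)             #    then decrypt the data


message = b"A large document would go here. " * 100
wrapped_key, encrypted_data = hybrid_encrypt(message, PUBLIC_KEY)
recovered = hybrid_decrypt(wrapped_key, encrypted_data, PRIVATE_KEY)
```

Only the 16-byte session key passes through the slow asymmetric operation; the bulk of the data is handled by the fast symmetric step, which is the point of the hybrid design.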

Hybrid cryptography is the foundation for secure communication across many modern applications and protocols, including:

Secure Sockets Layer / Transport Layer Security (SSL/TLS) for web browsing.

Secure email protocols such as PGP and S/MIME.

Secure storage, including cloud-based solutions and encrypted file systems.

Encrypted messaging services like WhatsApp and Signal use hybrid cryptography to establish a secure channel.

Message Integrity Controls

A Message Integrity Control (MIC) helps ensure the integrity of a message from the time it’s created until the recipient reads it. The control provides assurance to the recipient of the message’s integrity and, depending on the method used, can also guarantee its authenticity. A MIC is typically a cryptographic hash (a “message digest”) of the message data alone.

The general process for using a MIC involves the following steps:

Generation: The sender uses a mathematical function to produce a short, fixed-length value (often called a digest, tag, or checksum) from the message and, sometimes, a secret key.

Transmission: This value is appended to or sent alongside the message.

Verification: The recipient receives the message and the associated MIC. They use the same mathematical function on the received message to generate their own MIC.

Validation: The recipient compares their computed MIC with the one received from the sender. If the two values match, the recipient can be confident that the message has not been altered.

Some examples and variations of MICs include:

Message Authentication Code (MAC): A MAC is a type of MIC that uses a symmetric (secret) key to provide both integrity and authenticity.

How it works: A sender and receiver share a secret key. The sender combines the message with the key and uses a cryptographic function to produce a tag. The receiver uses the same key to verify the tag.

Security benefits: Because the key is secret, only a party with access to it can generate a valid MAC. This prevents an attacker who does not know the key from altering the message and creating a new, valid MAC. It provides both integrity and assurance that the message originated from a party with the secret key.

Example: Hash-based Message Authentication Code (HMAC), which uses a secret key in conjunction with a cryptographic hash function like SHA-256. HMAC is widely used in protocols such as IPsec, SSH, and TLS.
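
A minimal HMAC sketch using Python's standard `hmac` and `hashlib` modules is shown below. The key and messages are made-up examples, and the helper names `make_tag` and `verify_tag` are hypothetical.

```python
import hashlib
import hmac


def make_tag(key: bytes, message: bytes) -> bytes:
    """Sender: compute an HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    """Receiver: recompute the tag and compare in constant time."""
    return hmac.compare_digest(make_tag(key, message), tag)


shared_key = b"example shared secret"
message = b"transfer 100 to account 42"
tag = make_tag(shared_key, message)

ok = verify_tag(shared_key, message, tag)                                # unmodified message
tampered_ok = verify_tag(shared_key, b"transfer 900 to account 42", tag) # altered message
wrong_key_ok = verify_tag(b"attacker guess", message, tag)               # wrong key
```

The comparison uses `hmac.compare_digest` rather than `==` to avoid leaking information through timing differences.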

Checksum (unkeyed hash function): While technically a form of integrity control, a simple checksum or cryptographic hash function used alone does not provide authentication.

How it works: The sender computes a hash of the message and sends it along. The receiver computes the hash of the received message and compares it to the hash received.

Security weakness: An attacker can easily modify the message and compute a new, valid hash, making this method susceptible to tampering if the attacker intercepts and modifies the transmission.

Usage: Used when the threat of a malicious attacker is low, such as to detect accidental data corruption during transmission or storage. For security-critical applications, keyed methods like MACs or digital signatures are required.

Cyclic Redundancy Check (CRC): A CRC is a specific, more robust type of checksum. It is a non-cryptographic algorithm used to detect accidental errors in digital data. It is widely used in data storage and network communications to ensure data integrity.
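
The difference between detecting accidental corruption and resisting deliberate tampering can be sketched with the standard `zlib` and `hashlib` modules. The messages and helper names are made up for illustration.

```python
import hashlib
import zlib


def digest_of(message: bytes) -> str:
    """Unkeyed integrity value: anyone can compute it, including an attacker."""
    return hashlib.sha256(message).hexdigest()


def verify(message: bytes, digest: str) -> bool:
    return digest_of(message) == digest


original = b"transfer 100 to account 42"

# Accidental corruption: a single flipped bit changes the CRC, so it is detected.
corrupted = bytearray(original)
corrupted[0] ^= 0x01
crc_detects_error = zlib.crc32(original) != zlib.crc32(bytes(corrupted))

# Deliberate tampering: the attacker replaces the message AND recomputes
# the unkeyed hash, so the receiver's check still passes.
forged_message = b"transfer 900 to account 66"
forged_digest = digest_of(forged_message)
forgery_accepted = verify(forged_message, forged_digest)
```

Because no secret is involved, the forged pair verifies successfully, which is why security-critical applications use a keyed MAC or a digital signature instead.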

Hash Functions

A hash function is a one-way mathematical function that takes an input (or message) of any size and produces a fixed-size string of characters called a hash value, hash code, or digest. Note that in contrast, encryption is a two-way process that scrambles readable data (plaintext) into an unreadable format (ciphertext), which can be reversed with a corresponding key.

Hash functions are used for data integrity verification, password hashing, and digital signatures.

A good cryptographic hash function must satisfy several key requirements to be considered secure and reliable for modern applications. These properties ensure that it is a one-way function that provides data integrity and is resistant to malicious attacks:

1. Fixed Length: A message of any length should produce a fixed-length message digest.

2. Deterministic: For any given input message, a hash function must use the entire message and always produce the same hash output. This is crucial for verifying data, as a change to the original input should yield a different hash.

3. Pre-image resistance (One-way property): Given a hash output, it should be computationally infeasible to find the original input message that produced it. This makes it infeasible for an attacker to recover a strong password directly from its stored hash, for instance.

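4. Collision resistance: It should be computationally infeasible to find two different inputs that produce the same hash output. Collisions must exist, because an unlimited number of inputs map to a fixed number of outputs, but a secure hash function makes them impractical to find.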
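
The first two properties, and the way a small input change scrambles the output, can be observed directly with Python's standard `hashlib` module. The inputs are arbitrary examples and the helper `differing_bits` is hypothetical.

```python
import hashlib


def differing_bits(a: bytes, b: bytes) -> int:
    """Count the bit positions in which two equal-length digests differ."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


short_digest = hashlib.sha256(b"a").digest()
long_digest = hashlib.sha256(b"a" * 1_000_000).digest()

# Fixed length: both digests are 32 bytes, regardless of input size.
lengths = (len(short_digest), len(long_digest))

# Deterministic: hashing the same input again gives the same digest.
repeatable = hashlib.sha256(b"hello").digest() == hashlib.sha256(b"hello").digest()

# A one-character change produces a very different digest.
changed = differing_bits(hashlib.sha256(b"hello").digest(),
                         hashlib.sha256(b"hellp").digest())
print(lengths, repeatable, changed)
```

Pre-image and collision resistance cannot be demonstrated by running code; they are claims about what no known attack can do in practical time.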

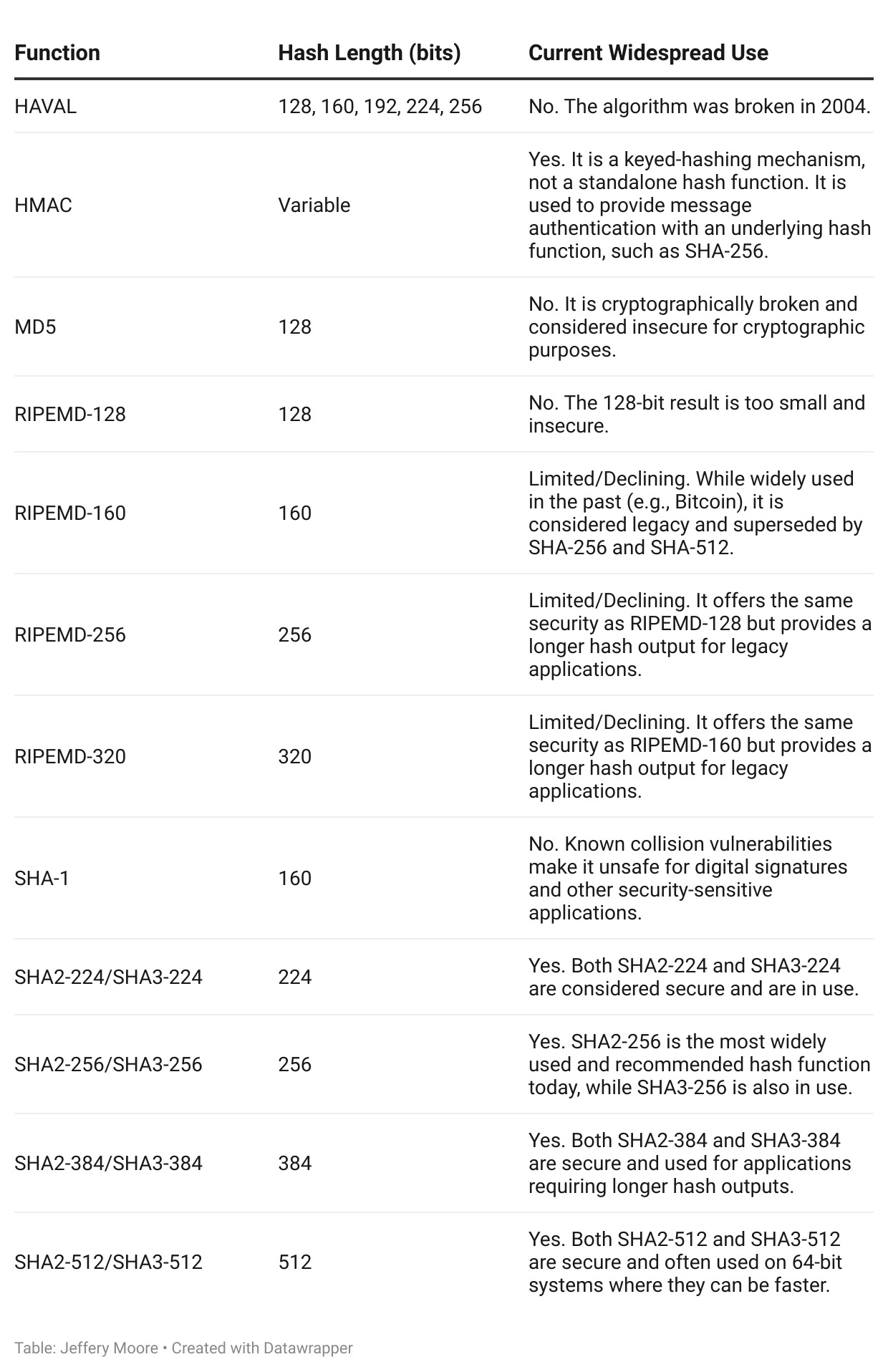

Hash Functions Table

A few things to note about these functions:

HMAC is not a hash function: HMAC is a keyed-hashing mechanism used to verify both data integrity and message authenticity. It uses a secret key with an underlying cryptographic hash function, such as SHA-256.

Hash length and security: A longer hash length generally means greater security, making it exponentially harder to find collisions or reverse the hash. MD5 (128 bits) and SHA-1 (160 bits) are no longer considered secure for cryptographic use, both because of their short outputs and because practical collision attacks against them have been demonstrated.

RIPEMD variants: While some RIPEMD variants (such as RIPEMD-160) have not been fully broken, they are less popular than the SHA family and are considered legacy, particularly given recent academic analyses that have challenged their security.

SHA-2 vs. SHA-3: Both SHA-2 and SHA-3 are modern, secure families of hash functions. SHA-3 is built on a different internal design (a sponge construction) and is immune to some attacks, such as length extension, that can affect SHA-256 and SHA-512 when they are used naively. SHA-256 remains the most prevalent standard today, but SHA-3 offers a robust and modern alternative.

Quantum

Quantum cryptography uses principles of quantum mechanics, such as superposition and entanglement, to create cryptographic systems that are theoretically unbreakable.

Post-quantum cryptography (PQC) develops new mathematical algorithms that can run on classical computers but are resistant to attacks from both classical and powerful quantum computers. It is often called “quantum-safe” or “quantum-resistant.”

How it works: PQC focuses on replacing vulnerable public-key methods like RSA and ECC, which a sufficiently large quantum computer could break using Shor’s algorithm (a quantum algorithm that efficiently solves integer factorization and discrete logarithms). PQC algorithms are based on different mathematical problems, for example those involving lattices (grids of evenly spaced points extending infinitely), hash functions, and error-correcting codes, that are believed to be intractable for both quantum and classical machines.

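Standards: The U.S. National Institute of Standards and Technology (NIST) has led a multi-year competition to evaluate and standardize PQC algorithms. In August 2024 it published the first three finalized standards: FIPS 203 (ML-KEM), FIPS 204 (ML-DSA), and FIPS 205 (SLH-DSA).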

Advantage: PQC can be implemented on existing hardware and software infrastructure, making it a scalable, cost-effective, and practical solution for the impending quantum threat.

Public key infrastructure (PKI) (e.g., quantum key distribution)

A Public Key Infrastructure (PKI) is a system that uses policies, procedures, software, and hardware to manage digital certificates and encryption keys. It provides a foundation for secure communications by ensuring the confidentiality, integrity, authenticity, and non-repudiation of data in activities such as web browsing, email, and digital signatures.

Key components of a PKI

Certificate Authority (CA): A trusted third-party organization that issues, manages, signs, and revokes digital certificates. The CA’s own private key is used to sign certificates, establishing a “chain of trust”.

Root CA: The CA at the top of the PKI hierarchy, identified by a self-signed certificate. The security of the entire PKI relies on its integrity, so root CAs are often kept offline in highly secure environments.

Intermediate CA: Subordinate CAs derive authority from the root CA and issue certificates to end-entities (users and devices). This structure protects the root CA from compromise.

Registration Authority (RA): An entity that verifies the identity of the user or device requesting a certificate. Once an identity is confirmed, the RA forwards the request to the CA for issuance of the certificate.

Digital Certificates: The core of PKI, these electronic credentials bind a user’s identity to their public key. They are tamper-evident, because any change invalidates the CA’s signature, and contain information like the owner’s name, public key, validity period, and the CA’s digital signature.

Certificate Revocation List (CRL) or Online Certificate Status Protocol (OCSP): Lists or services that the CA provides to inform parties which certificates have been revoked before their expiration date. A Validation Authority (VA) can operate this service.

Certificate Revocation List (CRL): a list maintained by a Certificate Authority (CA) that contains the serial numbers of digital certificates that have been revoked before their scheduled expiration date.

Online Certificate Status Protocol (OCSP): a real-time method for verifying the status of an X.509 (e.g., SSL/TLS) digital certificate.

In OCSP Stapling, the server that hosts the certificate performs the OCSP check itself. It periodically queries the OCSP Responder and “staples” a signed, time-stamped OCSP response to its TLS handshake with the client.

Certificate pinning is a technique, now largely deprecated in web browsers, in which a client associates a host with a specific certificate or public key. Instead of relying solely on the public key infrastructure (PKI) model, where any certificate signed by a trusted CA is accepted, the client only accepts the “pinned” certificate or key.

Certificate Database: A storage area for issued certificates and their metadata.

Certificate Management System: The hardware and software used to manage the delivery, access, and lifecycle of certificates.

Policies and Procedures: The rules that govern the PKI, including the Certificate Policy (CP) and Certification Practice Statement (CPS). These define the purpose, issuance, and trustworthiness of the certificates.

Certificate Types

Digital certificates, based on the X.509 standard, come in various types, each designed for a specific purpose.

By function:

TLS/SSL Certificate: Used to secure communication between a server (e.g., a web server) and a client (e.g., a web browser), creating an encrypted HTTPS connection.

Authentication: Verifies the server’s identity to the client.

Encryption: Encrypts data in transit.

Levels of Validation: DV (Domain Validated), OV (Organization Validated), and EV (Extended Validation).

Code Signing Certificate: Ensures the authenticity and integrity of software code.

Developer Identity: Verifies that the code came from the specified developer.

Integrity Check: Confirms the code has not been altered or tampered with since being signed.

S/MIME Certificate: Secures email communication.

Digital Signature: Authenticates the sender’s identity and confirms the message’s integrity.

Encryption: Encrypts email content so only the intended recipient can read it.

Client Authentication Certificate: Authenticates a user or device to a server, providing a higher level of security than traditional passwords. Also enables mutual authentication.

Enhanced Security: Requires possession of a certificate, which is harder to compromise than a password.

Passwordless Login: Can be used to log in automatically to a service or network.

Document Signing Certificate: Creates legally binding digital signatures for documents.

Identity Verification: Binds the signature to a verified individual or organization.

Tamper Protection: Shows if the document has been altered after it was signed.

By position:

Root Certificate: The ultimate trust anchor in a PKI. It is self-signed and held by a trusted CA.

Self-Signed: Its issuer and subject are the same.

Highest Trust: All other certificates derive their trustworthiness from the root certificate.

Offline Storage: The private key is kept highly secure and often offline.

Intermediate Certificate: Links the end-user certificate to the root certificate, forming a chain of trust.

Signed by Root CA: The intermediate certificate is signed by the root CA.

Enhanced Security: Protects the root CA’s key from frequent use, thereby minimizing risk.

Issuer of Leaf Certificates: The intermediate CA signs end-entity certificates.

End-Entity Certificate (Leaf): The final certificate in the chain of trust, issued to a specific user, server, or device.

Signed by Intermediate CA: Traces its trust back to the root.

Specific Use: Used for a single purpose, such as securing a specific website’s domain (in the case of a TLS/SSL certificate).
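The chain of trust described above can be sketched as a walk from the leaf up to a trusted root. This is a simplified model, not real X.509 validation: the `Cert` class and `build_chain` function are hypothetical, and the sketch only follows issuer names. A real validator also verifies each signature, validity dates, revocation status, and usage constraints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str


def build_chain(leaf, known, trusted_roots):
    """Follow issuer links from the leaf to a trusted self-signed root.

    Returns the list of subjects in the chain, or None if no trusted path exists.
    """
    chain = [leaf]
    current = leaf
    while current.subject != current.issuer:      # stop at a self-signed cert
        parent = known.get(current.issuer)
        if parent is None or parent in chain:     # missing issuer or a loop
            return None
        chain.append(parent)
        current = parent
    if current.subject not in trusted_roots:      # self-signed but not trusted
        return None
    return [c.subject for c in chain]


root = Cert("Example Root CA", "Example Root CA")
intermediate = Cert("Example Issuing CA", "Example Root CA")
leaf = Cert("www.example.com", "Example Issuing CA")

known = {c.subject: c for c in (root, intermediate)}
chain = build_chain(leaf, known, {"Example Root CA"})
untrusted = build_chain(leaf, known, set())
```

With the root in the trust store, `chain` lists the leaf, the intermediate, and the root in order; with an empty trust store, the same certificates yield `None`.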

Other types:

Self-Signed Certificate: Created and signed by the same entity that it identifies. It provides encryption but lacks the third-party trust of a CA-signed certificate.

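Wildcard Certificate: A type of TLS/SSL certificate that secures an unlimited number of subdomains one level below a single domain (for example, *.example.com covers www.example.com and mail.example.com, but not a.b.example.com).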

Multi-Domain (SAN) Certificate: Secures multiple, distinct domain names with a single certificate.
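The difference between wildcard and multi-domain coverage can be illustrated with a short hostname-matching sketch. It assumes the common rule that a wildcard stands in for exactly one leftmost label; the function names are hypothetical, and real TLS libraries apply additional checks.

```python
def matches(pattern: str, hostname: str) -> bool:
    """Check one certificate name against a hostname, label by label."""
    pattern_labels = pattern.lower().split(".")
    host_labels = hostname.lower().split(".")
    if len(pattern_labels) != len(host_labels):
        return False                      # a wildcard never spans multiple labels
    if pattern_labels[0] == "*":
        # The wildcard replaces only the leftmost label; the rest must be equal.
        return bool(host_labels[0]) and pattern_labels[1:] == host_labels[1:]
    return pattern_labels == host_labels


def cert_covers(names: list[str], hostname: str) -> bool:
    """A certificate covers a host if any of its listed names matches."""
    return any(matches(name, hostname) for name in names)


wildcard_names = ["*.example.com"]
san_names = ["example.com", "example.org", "shop.example.net"]

wildcard_www = cert_covers(wildcard_names, "www.example.com")      # one level down
wildcard_deep = cert_covers(wildcard_names, "a.b.example.com")     # two levels down
wildcard_apex = cert_covers(wildcard_names, "example.com")         # bare domain
san_org = cert_covers(san_names, "example.org")                    # distinct domain
```

In this model the wildcard name alone does not match the bare domain, which is why certificates often list the bare domain as an additional name.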

Quantum Key Distribution (QKD) is a secure method of key exchange that relies on the principles of quantum mechanics.

How it works: QKD enables two parties (e.g., Betty and Bob) to establish a shared secret key by transmitting photons. An eavesdropper (Eve) who attempts to intercept or observe these photons will disturb their quantum state.

Eavesdropping detection: The disturbance caused by Eve’s observation shows up as errors when Betty and Bob compare a sample of their results, so they can detect the intrusion, discard the compromised key, and generate a new one.

Limitation: A major drawback is that QKD is hardware-dependent, requires specialized equipment (fiber optic links or satellite relays), and is currently limited by distance and cost. It is primarily a solution for secure key exchange, not bulk data encryption.
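The eavesdropping-detection idea can be illustrated with a toy classical simulation. The text above does not name a specific protocol; this sketch assumes BB84, one well-known QKD scheme, and models photons as simple (bit, basis) pairs. The function name and parameters are hypothetical, and nothing here is real quantum hardware.

```python
import random


def bb84_error_rate(n_photons: int, eavesdrop: bool, seed: int = 0) -> float:
    """Simulate BB84 and return the error rate in the sifted key."""
    rng = random.Random(seed)
    kept = 0
    errors = 0
    for _ in range(n_photons):
        bit = rng.randint(0, 1)           # sender's random bit
        send_basis = rng.choice("+x")     # sender's random basis
        photon_bit, photon_basis = bit, send_basis

        if eavesdrop:
            eve_basis = rng.choice("+x")
            if eve_basis != photon_basis:
                photon_bit = rng.randint(0, 1)   # wrong basis gives a random result
            photon_basis = eve_basis             # Eve resends in her own basis

        recv_basis = rng.choice("+x")     # receiver's random basis
        if recv_basis == photon_basis:
            measured = photon_bit
        else:
            measured = rng.randint(0, 1)  # wrong basis gives a random result

        if recv_basis == send_basis:      # sifting: keep only matching bases
            kept += 1
            errors += measured != bit
    return errors / kept if kept else 0.0


quiet_channel = bb84_error_rate(4000, eavesdrop=False)
tapped_channel = bb84_error_rate(4000, eavesdrop=True)
```

In this model an undisturbed channel produces no errors in the sifted key, while an intercept-and-resend eavesdropper pushes the error rate toward roughly 25 percent, which is the signal the two parties look for before trusting the key.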

Key Management

Key management is the full set of processes and procedures for managing cryptographic keys throughout their lifecycle. This includes generating, distributing, storing, using, rotating, revoking, archiving, and ultimately destroying keys in a secure and controlled manner. It encompasses everything required to protect keys from unauthorized access, loss, or misuse.

The security of any cryptosystem is fundamentally tied to the security of its keys. If the keys are compromised, the encryption they protect is rendered useless, regardless of how strong the algorithm is. Remember Kerckhoffs’s Principle: a cryptosystem should remain secure even if everything about it except the key is public. This is why key management is not a minor operational detail but a critical, foundational security practice.

Core key management practices:

Key Creation/Generation: The process of creating strong, unique, and unpredictable keys that are computationally difficult to crack. Key sizes should be determined by the cryptographic algorithm and the business security requirements of the data to be protected.

Key Distribution: Keys must be securely exchanged or made available to authorized systems and users without interception or compromise. This may involve methods of secure key exchange such as Diffie-Hellman, PKI, or out-of-band delivery.

Key Storage: One of the most critical aspects of securing a cryptographic system. Two common hardware options for key storage are the Trusted Platform Module (TPM) and the Hardware Security Module (HSM).

Key Rotation: Keys should be replaced periodically with newly generated keys to limit their lifespan and minimize the impact of a compromise.

Key Escrow: Storing cryptographic keys with a trusted third party, known as an escrow agent, under a set of predefined conditions. This arrangement ensures that encrypted data remains accessible even if the original key holder is unavailable, loses their key, or is subject to a court order.

Key Revocation: If a key is known or suspected to be compromised, it must be revoked immediately and permanently to prevent further use. For certificates, this means publishing the revocation through a Certificate Revocation List (CRL) or the Online Certificate Status Protocol (OCSP).

Key Destruction: When a key and its associated data are no longer needed for any purpose, the key must be securely and permanently destroyed.
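The lifecycle practices above can be sketched as a small in-memory key ring. This is an illustration only: the `KeyRing` and `ManagedKey` classes and the state names are hypothetical, and a production system would keep key material in an HSM or a key management service rather than in process memory. Overwriting bytes in Python is also best effort, since the runtime may hold other copies.

```python
import secrets
from dataclasses import dataclass


@dataclass
class ManagedKey:
    version: int
    material: bytearray
    state: str = "active"   # active, retired, revoked, or destroyed


class KeyRing:
    def __init__(self, key_bytes: int = 32):
        self.key_bytes = key_bytes
        self.keys: dict[int, ManagedKey] = {}
        self.current = 0
        self.rotate()                       # generate the first key

    def rotate(self) -> int:
        """Retire the current key and generate a fresh random one."""
        old = self.keys.get(self.current)
        if old is not None and old.state == "active":
            old.state = "retired"           # still usable to decrypt old data
        self.current += 1
        material = bytearray(secrets.token_bytes(self.key_bytes))
        self.keys[self.current] = ManagedKey(self.current, material)
        return self.current

    def revoke(self, version: int) -> None:
        """Mark a compromised key so it is never used again."""
        self.keys[version].state = "revoked"

    def destroy(self, version: int) -> None:
        """Overwrite and drop key material that is no longer needed."""
        key = self.keys[version]
        for i in range(len(key.material)):
            key.material[i] = 0
        key.material = bytearray()
        key.state = "destroyed"

    def usable_for_encryption(self, version: int) -> bool:
        return version == self.current and self.keys[version].state == "active"


ring = KeyRing()
v1 = ring.current
v2 = ring.rotate()          # v1 becomes retired, v2 is the active key
ring.destroy(v1)            # v1 and its data are no longer needed
ring.revoke(v2)             # v2 is suspected compromised
```

Using `secrets.token_bytes` rather than the `random` module matters here, because key generation needs a cryptographically secure source of randomness.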

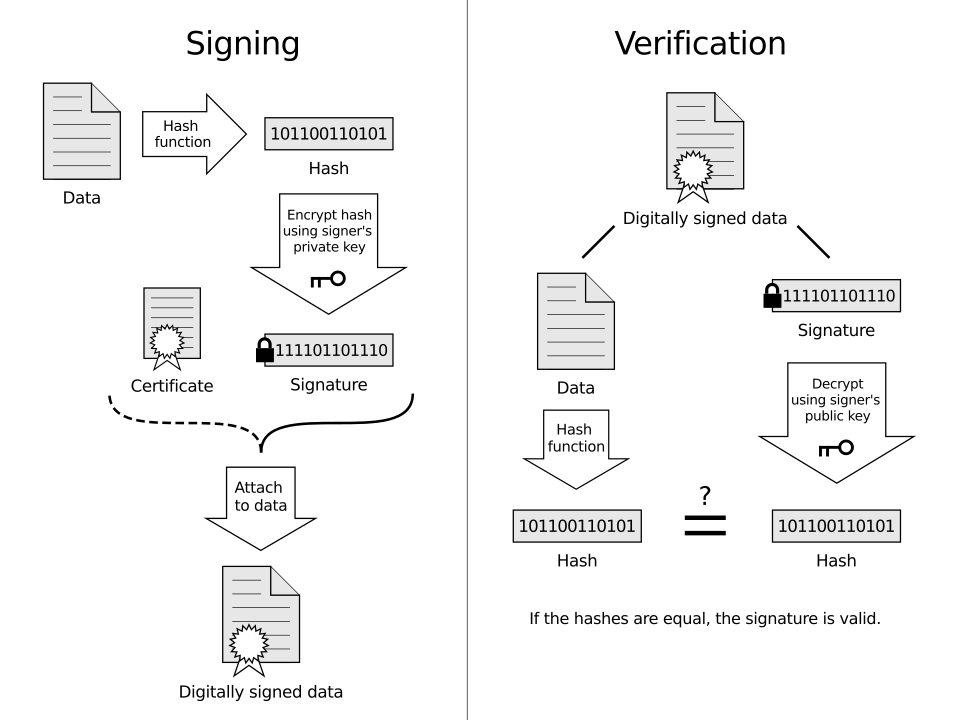

Digital Signatures

A digital signature is a cryptographic technique used to authenticate the identity of a sender and ensure the integrity of a digital message or document. Based on public-key cryptography, with the signer’s identity typically vouched for through public key infrastructure (PKI), it provides much stronger assurance of origin and integrity than a simple electronic signature.

A digital signature relies on a cryptographic process that uses a one-way hash function and a public/private key pair.

The signing process

Hashing the document: The sender runs the document or message through a one-way cryptographic hash function. This creates a unique, fixed-length “fingerprint” of the data called a hash or message digest. Even a single-character change to the document will completely alter this hash.

Encrypting the hash: The sender encrypts the hash value using their own private key. The result of this encryption is the digital signature.

Attaching the signature: The digital signature is then attached to the original document and sent to the recipient.

The verification process

Recipient’s hash: The recipient receives the document and its attached digital signature. They run the original document through the same hash function used by the sender to generate their own new hash.

Decrypting the signature: The recipient uses the sender’s public key to decrypt the digital signature received with the document.

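Comparing the hashes: The recipient’s software compares the newly generated hash with the decrypted hash from the sender. If the two match, the document has not been altered and was signed by the holder of the sender’s private key. If they differ, the document or the signature has been changed and should be rejected.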
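The signing and verification steps can be sketched with textbook RSA in plain Python. This is a teaching toy under loud assumptions: the modulus is built from two small, well-known Mersenne primes, there is no padding, and the hash is reduced modulo N, so it is not secure. Real systems use a vetted library with schemes such as RSA-PSS or ECDSA. All names here are hypothetical.

```python
import hashlib

# Toy key pair (insecure sizes, for illustration only).
P = 2**61 - 1                      # a Mersenne prime
Q = 2**89 - 1                      # another Mersenne prime
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (Python 3.8+)


def digest_as_int(message: bytes) -> int:
    """Hash the message, then reduce it so it fits the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Sender: hash the document, then transform the hash with the private key."""
    return pow(digest_as_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Recipient: recover the hash with the public key and compare."""
    return pow(signature, E, N) == digest_as_int(message)


document = b"Transfer 100 credits to account 42"
signature = sign(document)

valid = verify(document, signature)
tampered = verify(b"Transfer 900 credits to account 42", signature)
```

Changing a single character of the document changes its hash, so the recovered hash no longer matches and verification fails.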

In part three, we’ll continue through Domain 3, covering objectives 3.7 onward. If you’re on the CISSP journey, keep studying and connecting the dots, and let me know if you have any questions about this domain.